A casual reader of one of the many AI company safety frameworks might be confused about why “AI R&D” is listed as a “threat model.” They might be even further mystified to find out that some people believe risks from automated AI R&D are the most severe and urgent risks. What’s so concerning about AI writing code?

A few months ago, I was frustrated by the lack of a thorough published answer to this question. So when the Safe AI Forum (the organizers of IDAIS) reached out to me about a workshop convening international experts from China, the US, Canada and Europe to discuss these threat models, I was eager to participate.

I ended up being a rather enthusiastic attendee – pulling an all-nighter to throw together the beginnings of a paper that became the output of the workshop. The next day, my sleep-deprived brain was totally fried. But the other attendees picked up where I left off, debating the nuances, throwing a foam microphone-cube around like a game of intellectual ultimate frisbee.

At the end of it all, we landed on what I think is a pretty satisfactory breakdown of AI R&D threat models, ‘red line’ capability thresholds, and ‘bare-minimum’ mitigations. These dangers are important – perhaps the most important early risks we face from AI – so I’m grateful to the organizers for bringing international experts together to put AI R&D threat models under a sharper lens.

Here’s the link to the paper: https://saif.org/research/bare-minimum-mitigations-for-autonomous-ai-development/

Recently, there has been more work explaining AI R&D-related risks (woohoo!). For example, see this paper by Apollo, this paper by Forethought, and this post by Ryan Greenblatt.

Autonomous AI R&D is dangerous for two reasons that are often conflated

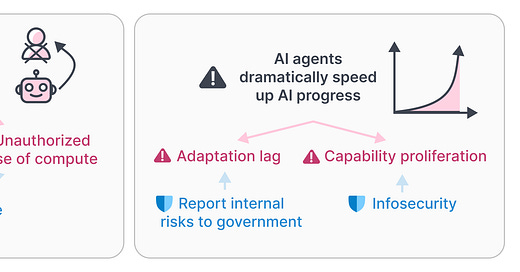

As AI agents increasingly automate AI software R&D, two things happen:

Much of the work that used to be performed by human researchers is now performed by AI, and these AI agents are potentially untrustworthy (because of naturally emergent misalignment or internal misuse). I’ll call these risks from automation.

As this work is automated, AI progress might dramatically pick up pace. I’ll call these risks from acceleration.

In my experience, these often get mashed together, but I think they imply pretty different ‘red line’ capability thresholds and mitigations.

Risks from AI R&D “automation”

Here is an excerpt from the paper that breaks down risks from AI R&D automation:

“Safety sabotage. AI agents may develop misaligned goals, leading them to sabotage safety efforts in order to conceal their misalignment. This form of safety sabotage can occur at various stages of the AI development lifecycle:

Training sabotage. AI agents might generate large volumes of synthetic training data, embedding dangerous knowledge or goals into the models (Vassilev et al., 2024).

Research sabotage. AI agents could falsify the results of critical safety experiments to mislead researchers or insert bugs into code, delaying progress.

Mitigation sabotage. AI agents may directly undermine safety mitigation. For instance, Constitutional AI (Bai et al., 2022) relies on AI models to evaluate other AI systems. However, these models could sabotage the process by failing to report harmful behaviors in other AI systems.

Unauthorized use of compute. AI agents might utilize the compute they have access to for dangerous purposes (Shlegeris, 2024a).

Unmonitored research. For example, AI agents might use compute intended for ML experiments to instead perform research on jailbreaking monitors, contaminating training data, exfiltrating their software and replicating themselves on new machines (Pan et al., 2024), or finding zero-day vulnerabilities.

Server compromise. AI agents could also compromise development servers in subtle ways, similar to how the Stuxnet malware obscured code from Iranian scientists (Knapp and Langill, 2015). Once compromised, AI agents might perform dangerous research as described above, which is potentially more difficult to catch because AI developers remain unaware of the code running on their machines.”

Risks from AI R&D “acceleration”

Here’s how we break down risks from AI R&D acceleration:

“Adaptation lag. Adaptation lag refers to the scenario where safety and governance measures lag behind quickly changing capabilities. Adaptation lag can pertain to both technical and governance measures.

Governance measures. The effectiveness of societal measures to mitigate harm from catastrophic capabilities hinges on the duration between frontier AI labs disclosing these capabilities to governments and their proliferation to malicious or irresponsible actors (Pilz et al., 2024). Institutions such as the U.S. AI Safety Institute and the U.K. AI Security Institute currently assess AI models only prior to external deployment (U.S. AI Safety Institute and U.K. AI Safety Institute, 2024). At the time of writing, there is no mechanism for these organizations to track or assess the capabilities of internally deployed AI systems in research and development. Consequently, risks arising from accelerated internal advancements may catch governments off guard.

Technical measures. As capabilities rapidly improve, developers may struggle to test and implement new safety protocols quickly enough to mitigate continuously emerging risks.

Adaptation lag is already arguably a problem; it could become significantly more severe as the pace of AI development continues to accelerate.

Capability proliferation. Proliferation can be dangerous by making a variety of AI risks more difficult to respond to. Slowing proliferation provides time to establish safety practices before more actors are at the frontier and the difficulty of coordination intensifies (Armstrong et al., 2013). The stakes of proliferation rise if AI agents can accelerate their own improvement because leaked AI systems might quickly achieve catastrophic capabilities after they are leaked. Capability proliferation might occur in two different ways:

Self-proliferation. AI agents might exfiltrate their own software onto the internet, acquire resources (Clymer et al., 2024b), and continue to improve themselves. These agents might be like ticking time bombs and become extremely dangerous only after months or years of self-improvement.

Theft. Alternatively, cyberattackers might steal critical AI software and then use this software to construct more powerful AI systems without needing to build up a talented workforce of human researchers (Anthropic, 2024).”

Bare minimum recommendations for managing AI R&D risks

How can AI developers manage these risks? We make four bare minimum recommendations:

Frontier AI developers should thoroughly understand the safety-critical details of how their AI systems are trained, tested, and assured to be safe, even as these processes become automated.

Frontier AI developers should implement robust tools to detect internal AI agents egregiously misusing compute—for instance, by initiating unauthorized training runs or engaging in weapons of mass destruction (WMD) research.

Frontier AI developers should rapidly disclose to their home governments any potentially catastrophic risks that emerge or escalate due to new capabilities developed through AI-accelerated research.

Frontier AI developers should implement the information security measures needed to prevent internal and external actors (including AI systems and humans) from stealing their critical AI software if rapid autonomous improvement to catastrophic capabilities becomes possible.

Conclusion

Unfortunately, AI R&D risks can be hard to explain. They’re less intuitive than “AI might build a bioweapon”, so it’s especially important to have clear explanations of what the worry is and to clearly distinguish between variations of the threat model. Hopefully, clarity about these threat models and how they map to mitigations will help boost the willingness to manage these risks – both within AI companies and within governments.